What Is a Robots.txt File and How Do You Create One?

A robots.txt file is a small text file that resides in the root folder of your site. It tells search engine bots which parts of the site they may crawl and index and which parts they should skip.

A small mistake while editing or customizing it, such as an accidental "Disallow: /" rule, can stop search engine bots from crawling your site, and your pages may then disappear from the search results.

In this article, I will explain what a robots.txt file is and how to create a good robots.txt file for SEO.


Why a Website Needs a Robots.txt File

When search engine bots visit a website or blog, they read the robots file first and crawl the content according to it. If your site has no robots.txt file, the bots are free to crawl and index everything on it, including content you would rather keep out of search results.

Search engine bots look for the robots file before crawling any website. When they find no instructions in it, they crawl all the content of the site. When they do find instructions, they follow them while crawling.

That is why the robots.txt file is needed. Without it, search engine bots treat the whole site as open, and data you never meant to expose in search can end up indexed.

Advantages of Robots.txt File

- It tells search engine bots which parts of the site to crawl and index and which parts to skip.

- A particular file, folder, image, PDF, etc. can be kept from being crawled by search engines.

- Some search engine crawlers request pages so aggressively that your site performance suffers. Adding Crawl-delay to your robots file can ease this problem. Googlebot does not honor this directive, though; for Google, crawl rate is managed through Google Search Console instead. Either way, the aim is to protect your server from being overloaded.

- You can keep compliant crawlers out of an entire section of a website. This is a request to well-behaved bots, not a way to password-protect content.

- You can keep internal search results pages from showing up in SERPs.

- You can improve your website's SEO by blocking low-quality pages.
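
As a rough illustration of the crawl-delay and internal-search points above, the sketch below feeds a small rule set to Python's standard `urllib.robotparser` module. The `/search/` path and the 10-second delay are assumptions chosen for the example, not values from any real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep bots out of internal search pages
# and ask them to wait 10 seconds between requests.
RULES = """\
User-agent: *
Crawl-delay: 10
Disallow: /search/
"""

def load_rules(text):
    """Parse robots.txt text without touching the network."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser

parser = load_rules(RULES)
delay = parser.crawl_delay("*")
search_allowed = parser.can_fetch("*", "https://example.com/search/shoes")
home_allowed = parser.can_fetch("*", "https://example.com/")

print(delay)           # 10
print(search_allowed)  # False
print(home_allowed)    # True
```

A crawler that respects the file would read the delay and sleep that long between requests; nothing in the file itself enforces it.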

Where does the Robots.txt file reside in the website?

The robots.txt file resides in the root folder of your site, and this holds for WordPress sites as well. If the file is not found at this location, search engine bots assume there are no restrictions and crawl your entire website, because they do not search the rest of the site for a robots.txt file.

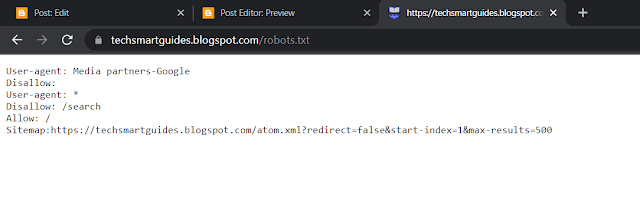

If you are not sure whether your site has a robots.txt file, type your domain followed by /robots.txt into the browser address bar, for example: example.com/robots.txt

A plain text page will open, like the one in the screenshot.

Figure: sample robots.txt file

This is the robots.txt file. If no such text page appears, you will need to create a robots.txt file for your site.
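
The same check can be scripted. The sketch below is one possible approach using only the Python standard library: `robots_url` builds the root-level address from any page URL, and `has_robots_txt` (a hypothetical helper name) requests it. Treating every HTTP error as "no file" is a simplifying assumption.

```python
from urllib.error import HTTPError
from urllib.parse import urlsplit, urlunsplit
from urllib.request import urlopen

def robots_url(page_url):
    """Return the robots.txt URL for the site that serves page_url."""
    parts = urlsplit(page_url)
    # Keep only scheme and host: robots.txt always sits at the root path.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))

def has_robots_txt(page_url, timeout=10.0):
    """Request the site's robots.txt; True when the server answers 200."""
    try:
        with urlopen(robots_url(page_url), timeout=timeout) as response:
            return response.status == 200
    except HTTPError:
        # 404 and other HTTP errors are treated as "no robots.txt" here.
        return False

print(robots_url("https://example.com/blog/some-post?ref=home"))
# https://example.com/robots.txt
```

Calling `has_robots_txt("https://example.com/")` performs a real network request, so it is left out of the printed example.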

Basic Format of Robots.txt File

The basic format of the robots.txt file is very simple. It looks like this:

    User-agent: [user-agent name]
    Disallow: [URL or page you don't want crawled]

These two lines make up a complete robots.txt file. A robots file can also contain multiple groups of user agents and directives (Disallow, Allow, Crawl-delay, etc.).

- User-agent: Names the search engine crawler/bot that the rules apply to. To give the same instructions to all search engine bots, use the * symbol after User-agent:, e.g. User-agent: *

- Disallow: Tells bots not to crawl the listed files and directories.

- Allow: Explicitly permits search engine bots to crawl the listed content.

- Crawl-delay: The number of seconds a bot should wait before loading and crawling page content.
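
To make the format concrete, here is a minimal sketch of a reader that splits robots.txt text into groups of user agents and directives. It is a simplified illustration, not a full implementation of the protocol, and the `/private/` paths in the sample are made up for the example.

```python
def parse_robots(text):
    """Split robots.txt text into groups: {"agents": [...], "rules": [...]}."""
    groups = []
    current = None
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue  # blank or malformed line
        field, value = line.split(":", 1)
        field, value = field.strip().lower(), value.strip()
        if field == "user-agent":
            # A User-agent line that follows rules starts a new group.
            if current is None or current["rules"]:
                current = {"agents": [], "rules": []}
                groups.append(current)
            current["agents"].append(value)
        elif current is not None:
            current["rules"].append((field, value))
    return groups

SAMPLE = """\
User-agent: *
Disallow: /private/         # hypothetical folder
Allow: /private/press/
Crawl-delay: 5

User-agent: Googlebot
Disallow: /example-subfolder/
"""

groups = parse_robots(SAMPLE)
for group in groups:
    print(group["agents"], group["rules"])
```

Each group pairs one or more user agents with the directives that follow them, which mirrors how the file is read from top to bottom.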

Preventing All Web Crawlers from Indexing Websites

    User-agent: *
    Disallow: /

With this rule in the robots.txt file, you tell all web crawlers/bots not to crawl any part of the website.

Allowing All Web Crawlers to Index All Content

    User-agent: *
    Disallow:

This rule in the robots.txt file allows all search engine bots to crawl every page of your site.
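
The difference between "Disallow: /" and an empty "Disallow:" is easy to miss, so here is a small sketch that checks both rule sets with Python's standard `urllib.robotparser`. The page URL is a placeholder, and `allowed` is a helper name invented for this example.

```python
from urllib.robotparser import RobotFileParser

BLOCK_ALL = ["User-agent: *", "Disallow: /"]
ALLOW_ALL = ["User-agent: *", "Disallow:"]

def allowed(rules, agent, url):
    """Return True if `agent` may fetch `url` under the given rule lines."""
    parser = RobotFileParser()
    parser.parse(rules)
    return parser.can_fetch(agent, url)

url = "https://example.com/any/page.html"  # placeholder URL
blocked_result = allowed(BLOCK_ALL, "Googlebot", url)
open_result = allowed(ALLOW_ALL, "Googlebot", url)

print(blocked_result)  # False
print(open_result)     # True
```

A single slash is the whole difference between hiding the entire site and opening it, which is why this file deserves a second look after every edit.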

Blocking a Specific Folder for Specific Web Crawlers

    User-agent: Googlebot
    Disallow: /example-subfolder/

This rule only prevents the Google crawler from crawling example-subfolder. To block all crawlers instead, your robots file would look like this:

    User-agent: *
    Disallow: /example-subfolder/
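
The two rule sets for example-subfolder can be compared side by side. The sketch below uses Python's standard `urllib.robotparser`; "Bingbot" simply stands in for any crawler other than Googlebot, and `can_crawl` is a helper name made up for the example.

```python
from urllib.robotparser import RobotFileParser

GOOGLE_ONLY = """\
User-agent: Googlebot
Disallow: /example-subfolder/
"""

ALL_CRAWLERS = """\
User-agent: *
Disallow: /example-subfolder/
"""

def can_crawl(rules, agent, path):
    """Check whether `agent` may crawl `path` under the given rules text."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch(agent, "https://example.com" + path)

page = "/example-subfolder/page.html"
results = {
    (name, agent): can_crawl(rules, agent, page)
    for name, rules in (("google_only", GOOGLE_ONLY), ("all", ALL_CRAWLERS))
    for agent in ("Googlebot", "Bingbot")
}
for key, value in results.items():
    print(key, value)
# ('google_only', 'Googlebot') False
# ('google_only', 'Bingbot') True
# ('all', 'Googlebot') False
# ('all', 'Bingbot') False
```

Pages outside the folder stay crawlable in both cases, since the rule only matches paths that begin with /example-subfolder/.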

Preventing a Specific Page (Thank You Page) from being Indexed

    User-agent: *
    Disallow: /[thank-you page URL]

This rule prevents all crawlers from crawling that page URL. If you want to block only a specific crawler, write it like this:

    User-agent: example
    Disallow: /[page URL]

This rule prevents only the crawler named example from crawling your page URL.
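
If you prefer to generate the file rather than type it by hand, a small script can do it. The sketch below is one possible way: `build_robots_txt` is a hypothetical helper, and `/thank-you/` is an assumed path standing in for your own thank-you page URL.

```python
def build_robots_txt(groups):
    """Render (user_agent, [disallowed paths]) pairs as robots.txt text."""
    blocks = []
    for agent, disallowed in groups:
        lines = ["User-agent: " + agent]
        if disallowed:
            lines.extend("Disallow: " + path for path in disallowed)
        else:
            # An empty Disallow value means nothing is blocked for this agent.
            lines.append("Disallow:")
        blocks.append("\n".join(lines))
    # One blank line between groups, none inside a group.
    return "\n\n".join(blocks) + "\n"

text = build_robots_txt([
    ("*", ["/thank-you/"]),                   # assumed thank-you page path
    ("Googlebot", ["/example-subfolder/"]),
])
print(text)
```

Saving the returned text as robots.txt in the root folder of the site is all that remains; the script itself writes nothing to disk.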

If you have any questions or suggestions about this article, leave a comment. If you found the article helpful, do not forget to share it!
